Clouds and Security: Can We Trust Remote Computing as Much as Local Computing?

Cloud services are now an integral part of many aspects of business and daily life. Along with the convenience and flexibility they provide, they also increase information security risks. Data breaches, cyberattacks, and privacy violations are problems that cannot be ignored. Security in cloud services is not only a way to protect information but also a key factor in building users' trust and loyalty.

Today, cloud platforms built on Cloud Native principles use virtualization and containerization to implement a microservice architecture. From here on, therefore, we discuss the security of virtual machines (VMs), a term we use to cover full virtualization, paravirtualization, and containers (such as Docker). Ideally, a cloud user would achieve the same (or nearly the same) security for remote virtual machines as for local ones. How can this be achieved?

The discussion focuses on technologies that are available today but have yet to find their niche.

State of the Art

The user can prepare a virtual machine (VM), or an entire architecture, on their own computers, encrypt it, and transfer it to the cloud for operation. However, once the VM starts running, it uses RAM, where data is processed in plain text and can be copied or altered. Snapshots pose a particular risk: they are widely used to capture a VM's state and consist of a copy of the RAM and CPU registers associated with that VM. When the VM is restored (rolled back) to that state, the reverse happens: data from the snapshot file is loaded back into RAM and registers.

This remained the case until AMD brought SEV (Secure Encrypted Virtualization) to market. SEV encrypts data on the fly as it moves between the CPU and RAM, so the VM's memory contents never appear in plain text outside the processor. This creates a seamless security context for the VM. Leading vendors and virtualization communities, including VMware, OpenStack, Kata Containers, QEMU, and others, support the technology. Google and AMD have formed a strategic partnership to build secure, trusted cloud services, and AWS and Azure also offer services based on SEV. The list of adopters is more than authoritative.

The downsides of the technology stem from its implementation: it uses the symmetric AES-128 cipher, and the encryption key is generated and stored inside the CPU, in its dedicated Secure Processor. Difficulties arise as soon as the VM state has to leave that CPU, for example when the VM is moved to another physical server. In VMware's case, the following features are not supported for SEV-protected VMs:

- System Management Mode

- vMotion

- Powered-on snapshots (however, no-memory snapshots are supported)

- Hot add or remove of CPU or memory

- Suspend/resume

- VMware Fault Tolerance

- Clones and instant clones

- Guest Integrity

- UEFI Secure Boot

Yet these are exactly the features we value virtualization for: flexible automated DevOps, fault tolerance and disaster recovery through VM migration to other servers and data centers, energy savings from consolidating running VMs on some servers and powering off the rest, and so on. Increased security has thus brought real, not imaginary, constraints.

Besides the loss of convenience, it is essential to keep in mind the maximum load on the key: the volume of data encrypted under a single key. A large volume of data encrypted with one key gives an attacker more material for recovering that key. Because the CPU exchanges very large volumes of data with RAM, the key must be changed periodically, and quite often. Is it possible to have security and convenience at once?

Key Distribution: Security Without Sacrificing Convenience

If the user of the VM, who owns the data in it, could supply the CPU with the key for encrypting the VM's memory, these restrictions would disappear: when the VM moves to another CPU (server or data center), the user would supply the key again, and the VM could continue working in the new location.

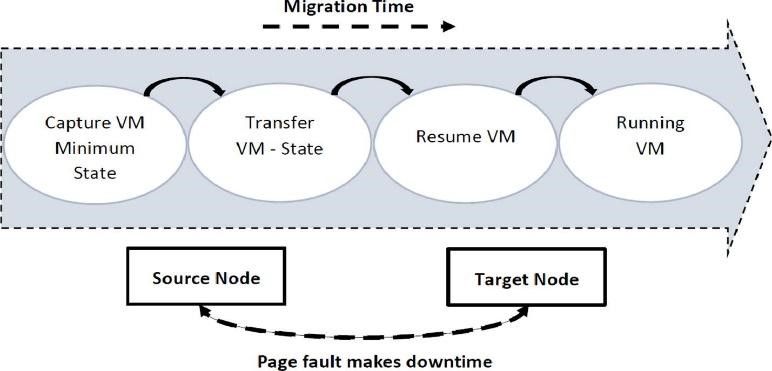

Migration steps:

- Capturing the VM state on the first server and writing it to shared storage accessible to both servers.

- Starting the VM on the second server from the state data in shared storage.

- Synchronizing the states of the two VMs over the network.

- Handing control and data flow over to the second VM.

- Stopping the first VM.

- Cleanup: deleting files that are no longer needed.
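To make the migration steps concrete, here is a minimal Python sketch under heavy simplifications. `Host`, `save_state`, `resume_state`, and the SHA-256-based `toy_crypt` keystream are hypothetical stand-ins invented for illustration: SEV itself uses AES inside the memory controller, and this toy cipher is neither AES nor suitable for real use. Network synchronization between the two VMs is not modelled. What the sketch shows is that the VM state crosses shared storage only as ciphertext, and the second server can do nothing with it until the owner supplies the key.

```python
import hashlib
import secrets


def toy_keystream(key: bytes, length: int) -> bytes:
    """Toy keystream: SHA-256(key || counter). Illustration only; NOT AES, NOT SEV."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(out[:length])


def toy_crypt(key: bytes, data: bytes) -> bytes:
    """XOR data with the keystream; the same call encrypts and decrypts."""
    return bytes(d ^ k for d, k in zip(data, toy_keystream(key, len(data))))


class Host:
    """Hypothetical server whose CPU keeps the VM key in a volatile slot."""

    def __init__(self, name: str):
        self.name = name
        self.key_slot = None  # empty until the data owner supplies a key

    def load_key(self, key: bytes) -> None:
        self.key_slot = key

    def clear_key(self) -> None:
        self.key_slot = None

    def _require_key(self) -> bytes:
        if self.key_slot is None:
            raise PermissionError(f"{self.name}: no key supplied by the owner")
        return self.key_slot

    def save_state(self, guest_ram: bytes) -> bytes:
        # Whatever leaves the CPU (RAM, snapshot, shared storage) is ciphertext.
        return toy_crypt(self._require_key(), guest_ram)

    def resume_state(self, encrypted_state: bytes) -> bytes:
        # Only a CPU holding the owner's key can use the saved state.
        return toy_crypt(self._require_key(), encrypted_state)


owner_key = secrets.token_bytes(16)  # 128-bit key held by the data owner
guest_ram = b"guest memory contents"

server1, server2 = Host("server1"), Host("server2")
server1.load_key(owner_key)

# Capture the state on server 1 and write it to shared storage, still encrypted.
shared_storage = server1.save_state(guest_ram)

# Server 2 reads the same ciphertext but cannot use it without the owner's key.
try:
    server2.resume_state(shared_storage)
except PermissionError as err:
    print(err)

server2.load_key(owner_key)  # the owner supplies the key at the new location
resumed = server2.resume_state(shared_storage)
server1.clear_key()  # the first VM is stopped and its key slot emptied
```

Note that nothing is re-encrypted on the way: the same ciphertext written by the first server is consumed by the second.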

In this case, the VM state is transferred in the same encrypted form in which it was processed, which maintains a seamless security context with no re-encryption and, consequently, a high level of confidentiality and integrity. Changing the encryption key follows a similar process, except that everything happens within one server and one CPU. A particularly welcome side effect is that backups, which for many years ranked among the leading channels for leaks of sensitive data, become far less of a concern: a backup contains only the encrypted state of the VM.

Key distribution is a classic problem in symmetric encryption schemes (recall that SEV uses AES, a symmetric cipher). With quantum supremacy on the horizon, there is an additional requirement: quantum resistance, the property of an algorithm whereby a quantum computer gains no significant advantage over a classical one in attacking it. By how they are implemented, quantum-resistant algorithms fall into two groups: quantum (based on laws and phenomena of physics) and post-quantum (based on mathematical operations).

The former have a significant advantage: their resistance is proven and will not change with the arrival of new computers or even new scientific discoveries. The technology is highly mature, and equipment is available on the market. The advantage of the latter is ease of adoption: only the key distribution algorithm needs to change. However, no ready-made products or national or industry standards existed at the time of writing; NIST had only recently selected candidate algorithms for this role in the US.

Quantum Key Distribution

We will not go into the principles of QKD here. For our purposes, it is enough to think of a QKD system as a distributed generator of truly random numbers: a random number is generated at point A, exactly the same number is generated at point B, and points A and B are connected by a quantum channel.

Thus, the key can be obtained simultaneously by the user and by the server where the VM is created, and then used for encryption. However, the following scheme is preferable:

- The server receives a command from the user to create a VM.

- The CPU generates key (1) and creates a VM, encrypting the allocated RAM with this key.

- In parallel with the previous step, the QKD procedure runs, after which the user and the CPU hold identical secret keys (2).

- The CPU XORs (adds modulo 2) keys (1) and (2) and sends the result to the user over any open channel; the user XORs the result with key (2) to recover key (1).

This ordering slightly reduces VM startup time: the VM does not have to wait for the QKD system to finish distributing keys.
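The key exchange in this scheme can be sketched in a few lines of Python. This is a minimal illustration, not an implementation of QKD: `simulated_qkd` is a hypothetical stand-in that simply hands both ends the same random bytes, and the encryption of VM memory with key (1) is not modelled. It shows why sending key (1) XOR key (2) over an open channel is enough for the user to recover key (1).

```python
import secrets

KEY_LEN = 16  # 128-bit keys, matching the AES-128 key size used by SEV


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Bitwise XOR (addition modulo 2) of two equal-length byte strings."""
    if len(a) != len(b):
        raise ValueError("keys must have equal length")
    return bytes(x ^ y for x, y in zip(a, b))


def simulated_qkd(length: int) -> tuple[bytes, bytes]:
    """Hypothetical stand-in for a QKD link: both ends receive the same fresh
    random key. No quantum protocol is modelled here."""
    shared = secrets.token_bytes(length)
    return shared, shared  # (user's copy, CPU's copy)


# The CPU generates key (1) and encrypts the VM's RAM with it (not modelled).
key1_cpu = secrets.token_bytes(KEY_LEN)

# In parallel, QKD gives the user and the CPU the same secret key (2).
key2_user, key2_cpu = simulated_qkd(KEY_LEN)

# The CPU publishes key (1) XOR key (2) over an open channel.
public_message = xor_bytes(key1_cpu, key2_cpu)

# The user removes key (2): (k1 ^ k2) ^ k2 == k1.
key1_user = xor_bytes(public_message, key2_user)
```

This is a one-time pad, so key (2) must be as long as key (1), uniformly random, and never reused. The XOR hides key (1) from an eavesdropper but does not authenticate the message: bits flipped in transit would go unnoticed unless the channel adds integrity protection.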

In another scenario, the user prepares a VM locally, encrypts it, and then transfers it to the data center, where the matching key has already been delivered via QKD. This option appears more advantageous for security: the data inside the VM never appears in plain text anywhere, so the principle of a seamless (end-to-end) security context is fully implemented.

Other cases (migration, backup and recovery, etc.) follow similar sequences, each with its own specifics. Throughout, the key registry is held only by the user, the owner of the data. Keys exist only temporarily inside the protected area of the CPU, which is cleared when power is removed or the server is rebooted. Therefore, every operation requests the key from the user and can proceed only after the user provides it.
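The ownership rule described above can be modelled as a small state machine. The sketch below is hypothetical throughout: `OwnerKeyRegistry`, `SecureProcessorModel`, `run_operation`, and the owner's static list of allowed operations are invented names for illustration, not an AMD or vendor API. It shows two properties: the CPU's key slots do not survive a reboot, and each operation obtains the key from the owner, who can refuse.

```python
import secrets


class OwnerKeyRegistry:
    """Hypothetical owner-side registry: the only persistent copy of each VM key."""

    def __init__(self, allowed_operations):
        self._keys = {}
        self._allowed = set(allowed_operations)  # owner's policy, assumed static here

    def create_key(self, vm_id):
        self._keys[vm_id] = secrets.token_bytes(16)  # 128-bit key

    def release(self, vm_id, operation):
        # The owner decides, per operation, whether to hand over the key.
        if operation not in self._allowed:
            raise PermissionError(f"owner refused key for {operation!r} on {vm_id}")
        return self._keys[vm_id]


class SecureProcessorModel:
    """Hypothetical stand-in for the CPU's protected, volatile key storage."""

    def __init__(self):
        self._slots = {}

    def install(self, vm_id, key):
        self._slots[vm_id] = key

    def has_key(self, vm_id):
        return vm_id in self._slots

    def reboot(self):
        self._slots.clear()  # keys do not survive power-off or reboot


def run_operation(operation, vm_id, cpu, registry):
    """Every operation requests the key from the owner before it can proceed."""
    cpu.install(vm_id, registry.release(vm_id, operation))
    return f"{operation} of {vm_id} done"


registry = OwnerKeyRegistry(allowed_operations={"start", "restore", "migrate"})
registry.create_key("vm-42")
cpu = SecureProcessorModel()

print(run_operation("start", "vm-42", cpu, registry))
cpu.reboot()
print(cpu.has_key("vm-42"))  # False: the key is gone after the reboot
print(run_operation("restore", "vm-42", cpu, registry))
```

An operation the owner has not approved, such as `"clone"` in this example, fails with `PermissionError` because the key is never released.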

This solution also has its weaknesses:

- QKD relies on the laws of physics and therefore has physical limitations: a quantum channel can span roughly 100 km. Distances of around 1,000 km have been reported, but no commercial implementations are available to date. For a long time to come, the main consumers of such secure services are likely to be corporations, which usually have their own premises near data centers for physical access to and maintenance of their equipment. For them, distance will not be a significant limitation.

- QKD is expensive. Until recently this was indeed true, at around €100k per set. However, our scenario needs a system that operates over short distances, which lowers the equipment requirements and, accordingly, the price. In addition, the technology is maturing and IP circulation is increasing, both of which push prices down. A price of around €2-3k per server can therefore be expected in the near future.

- SEV uses AES-128, which is not the most robust cipher, creating a risk of key recovery. For now, this risk can be mitigated by changing keys more often; in the future, AMD and other chipmakers are expected to move to stronger ciphers.
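How often is "more often"? A back-of-envelope calculation helps. The Python sketch below counts how many 16-byte AES blocks are encrypted under one key at a given memory bandwidth and how long it takes to reach a per-key budget. Both inputs are assumptions chosen for illustration, not figures from AMD: a sustained 100 GB/s of encrypted memory traffic and a budget of 2^48 blocks per key. SEV's actual cipher mode is not modelled, so the right budget for real hardware may differ.

```python
import math

AES_BLOCK_BYTES = 16  # the AES block size is 128 bits regardless of key length


def blocks_encrypted(bandwidth_bytes_per_s: float, seconds: float) -> float:
    """Number of 16-byte AES blocks processed at a given memory bandwidth."""
    return bandwidth_bytes_per_s * seconds / AES_BLOCK_BYTES


def seconds_until_limit(bandwidth_bytes_per_s: float, block_limit: float) -> float:
    """Time until the blocks encrypted under one key reach block_limit."""
    return block_limit * AES_BLOCK_BYTES / bandwidth_bytes_per_s


# Assumptions (hypothetical, for illustration only):
BANDWIDTH = 100e9     # 100 GB/s of encrypted memory traffic, sustained
REKEY_LIMIT = 2**48   # per-key block budget chosen for this example

per_hour = blocks_encrypted(BANDWIDTH, 3600)
print(f"blocks per hour: {per_hour:.3g} (about 2^{math.log2(per_hour):.1f})")
print(f"hours until rekey: {seconds_until_limit(BANDWIDTH, REKEY_LIMIT) / 3600:.1f}")
```

Under these assumptions the budget is used up in roughly 12.5 hours, which illustrates why rekeying a busy server is a matter of hours rather than months; the conclusion scales linearly with both the bandwidth and the chosen budget.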

Despite these weaknesses, the solution provides a much higher level of security for virtual infrastructure, and the necessary components are already highly market-ready.

Assessment of Trends

Two questions need answering here: how far ahead of their time are the technologies described above, and where are they actually needed?

During the preparation of the article, the authors' opinions ranged from “implement everywhere” to “why force it”.

As of today, SEV is a fairly niche technology that few users are likely to need. Its areas of application are narrow, and clouds have done without it for a long time, quite successfully. Implementing SEV everywhere would mean reworking not only the functions but also the properties that clouds offer and inventing many workarounds, making everything complex and hard to maintain. For example, VM migration, recovery from backups or snapshots (especially if the host machine has failed), hot redundancy, and replication would all be limited to varying degrees, or impossible, without going beyond the SEV concept. Then there is plain vendor lock-in: the technology is available only on AMD EPYC processors.

At the same time, SEV could change the picture for edge computing and various "thick" embedded solutions (cars, for example). It is well suited to operating hardware in an untrusted environment. Unfortunately, the technology is not yet mature for such applications, as it was originally positioned for servers and data centers. We hope development moves in this direction and that SEV will soon appear in these niches.

QKD, for now, is an expensive and extravagant toy. It has remarkable properties and elegant physics and mathematics inside, but its limitations make it unlikely to be used across cloud technologies everywhere.

Realistic Scenarios

We have considered integrating two top-notch (and therefore rare) technologies, which together can be called the maximum achievable level of security in the cloud. Are there realistic scenarios where this is really necessary?

Open RAN. A concept of mobile operator infrastructure that emerged from combining NFV, Cloud Native, and fully open interfaces, as an attempt to move away from proprietary single-vendor solutions. One of its components, MANO (Management and Orchestration), manages NFV; its compromise would mean the operator losing control over its network. Components (VNFs) that implement security functions fall into the same category.

SoftHSM. A technology that virtualizes the Holy Grail of cryptography, the HSM (Hardware Security Module). Today, HSMs are a key component (in both the literal and the figurative sense) in authenticating a SIM card in a mobile operator's network and a bank card at an ATM. It goes without saying that they must be protected as strongly as possible.

Compliance. In some situations, compliance with regulatory requirements is the highest priority, and penalties for non-compliance can exceed the value of the business or lead to criminal prosecution. Even a wealthy company can face crippling penalties for GDPR non-compliance followed by a personal data leak.

Summary

AMD SEV technology significantly raises the security of virtual cloud infrastructure, bringing it closer to that of local computing. The limitations it introduces can be lifted by applying Quantum Key Distribution (QKD).

The main alternative to QKD remains post-quantum key distribution algorithms, once they are standardized. It is important to remember that the resistance of quantum key distribution is mathematically proven, while that of post-quantum algorithms is not. For the latter, there is always a risk that an algorithm or computational technique will be invented in the future that makes them vulnerable.

Equipment implementing AMD SEV and QKD is already on the market, and its price is expected to reach an acceptable level in the near future. Such a solution is applicable in cloud platforms, NFV, and Open RAN: in any Cloud Native infrastructure.